There’s no denying that society is trending towards an autonomous future. From delivery robots to drones to self-driving cars and everything in between, the tech industry is hyper-focused on developing solutions that make our lives easier and more convenient.

Navigation plays a huge role in this development, as most autonomous products involve getting a person or object from one place to another without any human effort. Global Navigation Satellite Systems (GNSS) rely on a constellation of satellites and receivers to establish geographic location data, which can be used for either absolute or relative positioning.

This article will take a look at the debate over GNSS absolute positioning versus GNSS relative navigation, a divisive topic in the tech community. While no one doubts the importance of equipping robots, drones, or autonomous vehicles (AVs) with navigation capabilities, two different schools of thought exist on how exactly to do so.

Generally speaking, today’s autonomous tech developers tend to favor either absolute or relative positioning – and have VERY strong feelings about it.

Absolute vs Relative Navigation

Before we get into the debate, let’s define these two types of navigation and get to know how they generate positioning data.

Absolute Navigation

Absolute navigation is the method of determining where you are and where you are going using precise geospatial coordinates. Essentially, every object has a geographic location on Earth’s surface, and those location coordinates are used to navigate between different places and objects.

Consumer mapping applications are an example of absolute navigation; if you type in two addresses, a specific route will be calculated based on the geographic coordinates of those places and the locations of road networks connecting them.
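To make the routing idea concrete, here is a minimal sketch, assuming a tiny hypothetical road graph whose intersections are stored as latitude/longitude pairs. It runs A* search, using great-circle (haversine) distance on a spherical Earth both as the cost of each road segment and as the heuristic toward the destination. The names `haversine_m` and `a_star` and the sample coordinates are illustrative only; real mapping services model roads far more richly (speed limits, turn costs, traffic).

```python
import heapq
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; treats the Earth as a sphere


def haversine_m(a, b):
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def a_star(coords, edges, start, goal):
    """Shortest route over a road graph whose nodes are (lat, lon) coordinates.

    coords: node -> (lat, lon); edges: node -> list of directly connected nodes.
    Each road segment costs its great-circle length; the heuristic is the
    great-circle distance to the goal. Returns (path, meters) or (None, inf).
    """
    frontier = [(haversine_m(coords[start], coords[goal]), 0.0, start)]
    came_from = {}
    best = {start: 0.0}
    while frontier:
        _, cost, node = heapq.heappop(frontier)
        if node == goal:
            path = [node]
            while node in came_from:
                node = came_from[node]
                path.append(node)
            return path[::-1], cost
        if cost > best[node]:
            continue  # stale entry: a cheaper route to this node was already found
        for nxt in edges.get(node, []):
            new_cost = cost + haversine_m(coords[node], coords[nxt])
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                came_from[nxt] = node
                f = new_cost + haversine_m(coords[nxt], coords[goal])
                heapq.heappush(frontier, (f, new_cost, nxt))
    return None, math.inf


# Hypothetical intersections near (0°, 0°): B lies on the direct road, C is a detour.
coords = {"A": (0.0, 0.0), "B": (0.0, 0.01), "C": (0.05, 0.01), "D": (0.0, 0.02)}
edges = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "D"], "D": ["B", "C"]}
path, meters = a_star(coords, edges, "A", "D")
print(path, round(meters))  # ['A', 'B', 'D'] 2224
```

Because a straight great-circle line never overestimates the remaining road distance, the heuristic speeds up the search without changing which route comes out shortest.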

GNSS Absolute Positioning

GNSS achieves absolute positioning by leveraging signals transmitted from satellites orbiting the Earth to determine an object’s precise coordinates.

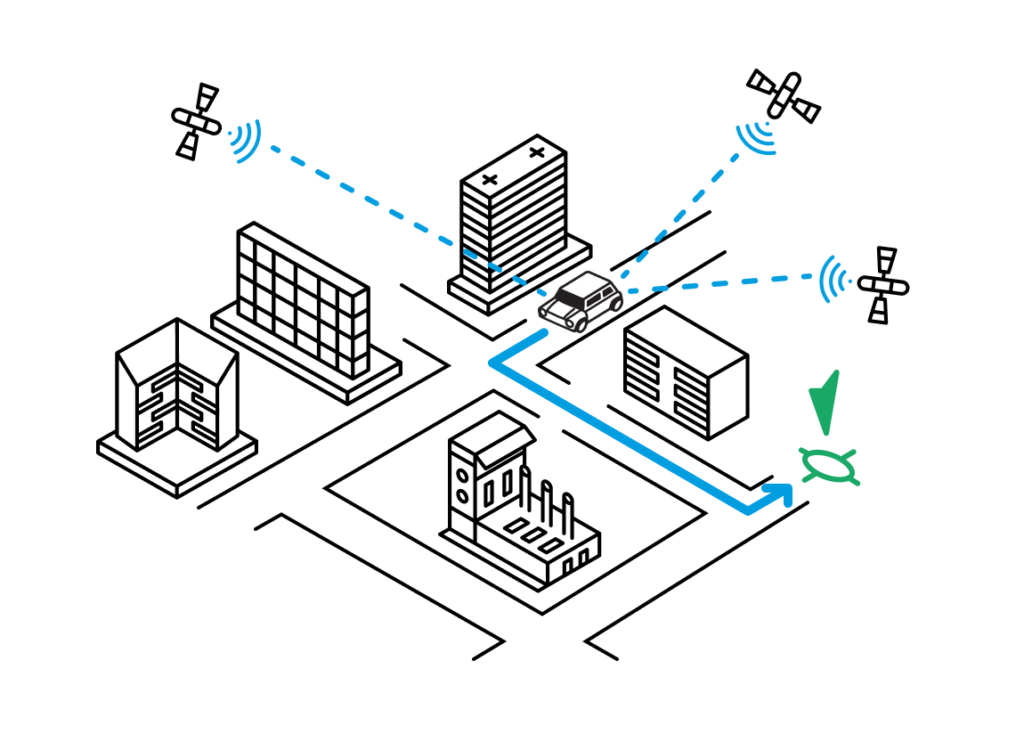

By receiving multiple signals simultaneously, a GNSS receiver can trilaterate its position by calculating its distance from each satellite based on signal travel time. These calculations enable the receiver to determine its absolute position in terms of latitude, longitude, and altitude, providing accurate location information regardless of the receiver’s initial reference point or starting position. Absolute navigation relies on precise location coordinates to determine the best route.[/caption]

Absolute navigation relies on precise location coordinates to determine the best route.[/caption]
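The receiver-side calculation can be sketched as a small least-squares problem. This minimal sketch assumes each measured distance (a pseudorange) equals the true distance to the satellite plus one unknown receiver clock offset expressed in meters; that fourth unknown is why at least four satellites are needed. It solves for position and clock offset with Gauss-Newton iterations in Earth-centered Cartesian coordinates (x, y, z in meters), starting from the Earth’s center; converting the result to latitude, longitude, and altitude is a separate step not shown. The function names, satellite positions, and receiver location are synthetic, and the sketch ignores atmospheric delays, satellite clock errors, and Earth rotation, all of which real receivers must model.

```python
import math


def _solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        known = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - known) / m[r][r]
    return x


def solve_position(sats, pseudoranges, iterations=10):
    """Estimate receiver position (x, y, z) and clock offset b, all in meters.

    Measurement model (hypothetical, simplified): pseudorange_i = |sat_i - receiver| + b.
    Each Gauss-Newton step solves the normal equations (H^T H) dx = H^T r.
    """
    if len(sats) < 4:
        raise ValueError("need at least four satellites (x, y, z, clock offset)")
    est = [0.0, 0.0, 0.0, 0.0]  # start at Earth's center with zero clock offset
    for _ in range(iterations):
        H, r = [], []
        for sat, rho in zip(sats, pseudoranges):
            diff = [est[k] - sat[k] for k in range(3)]
            rng = math.sqrt(sum(d * d for d in diff))
            H.append([d / rng for d in diff] + [1.0])  # partial derivatives of predicted pseudorange
            r.append(rho - (rng + est[3]))             # measured minus predicted
        HtH = [[sum(row[i] * row[j] for row in H) for j in range(4)] for i in range(4)]
        Htr = [sum(row[i] * ri for row, ri in zip(H, r)) for i in range(4)]
        step = _solve_linear(HtH, Htr)
        est = [e + s for e, s in zip(est, step)]
    return tuple(est[:3]), est[3]


# Synthetic example: receiver on the equator, five satellites above its horizon.
truth, clock_offset_m = (6_371_000.0, 0.0, 0.0), 1_000.0
sats = [
    (26_560e3, 0.0, 0.0),
    (20_000e3, 17_000e3, 0.0),
    (20_000e3, -12_000e3, 13_000e3),
    (18_000e3, 5_000e3, -19_000e3),
    (21_000e3, -8_000e3, -14_000e3),
]
pseudoranges = [math.dist(s, truth) + clock_offset_m for s in sats]
position, offset = solve_position(sats, pseudoranges)
print([round(c, 3) for c in position], round(offset, 3))
```

With more than four satellites the system is overdetermined, and the least-squares step spreads measurement errors across all of them instead of trusting any single signal.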

Relative Navigation

Relative navigation involves using perception of immediate surroundings to get from one place to another, and is typically best for obstacle avoidance. This is how humans navigate in the world; for example, if you are walking around in your home in the dark, you will rely on your own knowledge of where furniture is located, as well as anything you can see or feel around you.

GNSS Relative Positioning

GNSS achieves relative positioning by comparing the measurements of multiple receivers to determine their positions relative to each other, rather than determining absolute positions with respect to the Earth’s surface.

Two common techniques for computing relative positioning are Real-Time Kinematic (RTK) and Differential GPS (DGPS), which both involve two receivers (one fixed and one mobile). There are a number of RTK systems out there revolutionizing agriculture, delivery logistics, construction, drone surveying and mapping, and more.

The fundamental framework is centered around a base station – a receiver with a known, fixed position – that continuously receives signals from GNSS satellites. Because the base station knows exactly where it is, it can work out how far off each satellite measurement is and broadcast corrections. Meanwhile, the rover simultaneously receives signals from the same satellites and combines them with the corrections received from the base station in real time.

By applying these corrections to the rover’s measurements, RTK achieves centimeter-level accuracy in relative positioning between the base station and the rover.
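The base-station principle can be illustrated with a simplified, code-based DGPS-style correction. This is a minimal sketch, assuming pseudoranges keyed by hypothetical satellite IDs and an error term that is the same for base and rover (satellite clock and atmospheric delays are nearly identical for receivers close together). The base station computes each satellite’s correction as its known geometric range minus its measured pseudorange; the rover adds that correction to its own measurement of the same satellite. RTK goes further by using carrier-phase measurements and resolving integer ambiguities, which this sketch does not attempt. `compute_corrections`, `apply_corrections`, and all numbers are illustrative.

```python
import math


def compute_corrections(base_pos, sat_positions, base_pseudoranges):
    """Per-satellite correction = known geometric range - measured pseudorange (meters)."""
    return {
        sat_id: math.dist(sat_positions[sat_id], base_pos) - rho
        for sat_id, rho in base_pseudoranges.items()
    }


def apply_corrections(rover_pseudoranges, corrections):
    """Add the base station's correction to each rover pseudorange.

    Satellites the base station did not observe are dropped, since they cannot be corrected.
    """
    return {
        sat_id: rho + corrections[sat_id]
        for sat_id, rho in rover_pseudoranges.items()
        if sat_id in corrections
    }


# Synthetic setup: a per-satellite error shared by both receivers, plus each receiver's clock offset.
sat_positions = {"G01": (15_600e3, 7_540e3, 20_140e3), "G02": (18_760e3, 2_750e3, 18_610e3),
                 "G03": (17_610e3, 14_630e3, 13_480e3)}
common_error = {"G01": 4.2, "G02": -2.7, "G03": 6.1}  # meters, hypothetical
base_pos, rover_pos = (6_370e3, 0.0, 0.0), (6_370e3, 500.0, 300.0)
base_clock, rover_clock = 120.0, -45.0  # meters, hypothetical


def measure(pos, clock):
    """Simulated pseudoranges: true range + shared satellite error + receiver clock offset."""
    return {s: math.dist(p, pos) + common_error[s] + clock for s, p in sat_positions.items()}


corrections = compute_corrections(base_pos, sat_positions, measure(base_pos, base_clock))
corrected = apply_corrections(measure(rover_pos, rover_clock), corrections)
for s, rho in corrected.items():
    # Shared errors cancel; what remains is rover_clock - base_clock = -165 m for every satellite.
    print(s, round(rho - math.dist(sat_positions[s], rover_pos), 6))
```

The leftover offset is identical for every satellite, so a position solver absorbs it into the rover’s clock term instead of turning it into a position error.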

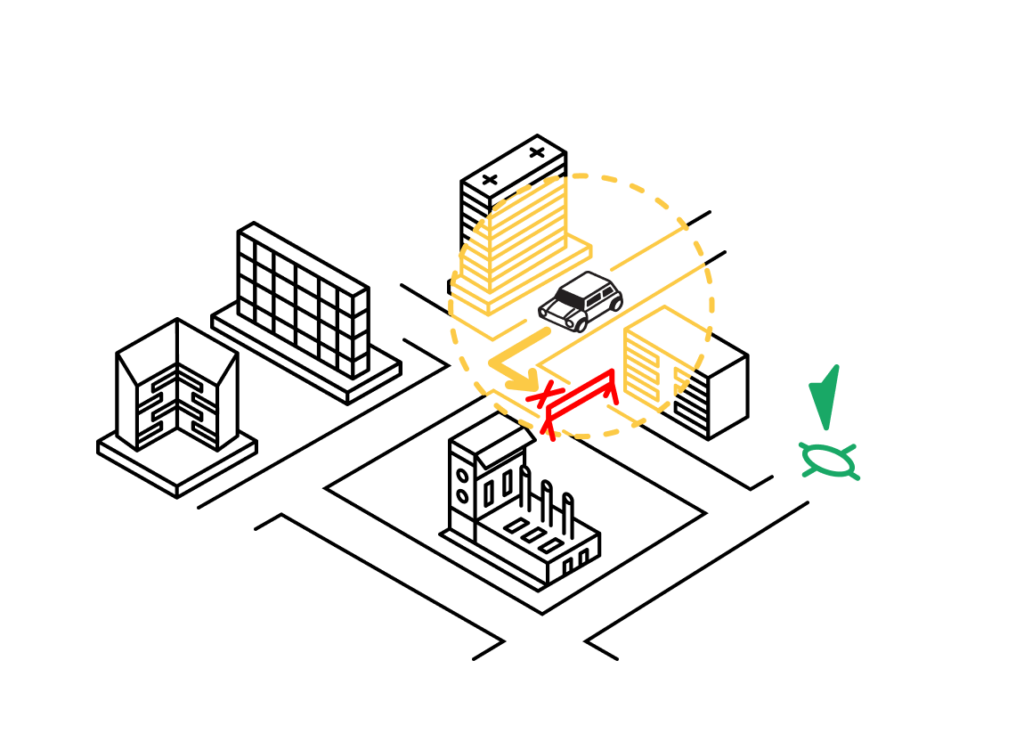

Relative navigation relies on sensing nearby objects to determine the best route.

The Absolute Navigation vs Relative Navigation Debate

There are benefits and limitations to each of these navigation methods, making each one a better fit for some applications than for others. For situations that demand accurate coordinates of a point on the Earth’s surface, independent of external reference points, GNSS absolute positioning techniques will be better suited. In other applications, though, relative positioning will provide more useful data.

When it comes to developing autonomous navigation systems, we strongly believe that the only way to minimize risk and maximize precision is to combine the best of both absolute and relative positioning capabilities.

If you’re familiar with this space, you probably already have an opinion on the relative vs absolute navigation debate. To show you why just one method is not enough, we’ll break down what an autonomous solution could look like in each of these three scenarios: powered by absolute location, powered by relative position, and powered by just the right combination of the two.

The case for absolute positioning

Let’s start with absolute positioning. In theory, this is the perfect solution. Computers have perfect recall, meaning they can store and retrieve the geographic coordinates of any person, place, or object. These coordinates can hypothetically be recorded for everything in the world, generating an absolute frame of reference for all possible destinations and obstacles.

Humans could never remember the exact coordinates of everything in the world, let alone calculate the precise degrees and directions of movement required to navigate amongst them. Supporters of GNSS absolute positioning say we should take advantage of this dichotomy and allow computers to do what humans cannot.

But is anything ever really perfect? No. Geospatial data is incredibly difficult to keep up-to-date with our dynamically changing world, so no true absolute frame of reference exists at any given moment.

Sure, there are datasets that come close using the latest in GNSS positioning tech (including techniques like dead reckoning and correction-streaming protocols like NTRIP), but they are not equally distributed throughout the world.

The truth is navigation and positioning still require some level of nuance. Take, for instance, an AV driving down a road. Even though it’s equipped with location data representing the lines on the road, the bike lane next to it, and the trees just beyond the curb, it does not have the coordinates of the vehicle that just abruptly stopped five yards ahead. In this instance, a human or system perceiving the stopped car would be better able to avoid a collision.

The case for relative navigation

So if perception and reasoning are superior to absolute navigation, then shouldn’t we develop autonomous solutions using relative navigation alone?

Not necessarily. While computers have perfect recall, they still do not have the level of fidelity in interpreting the environment that humans do. Computers may surpass humans in many things, but contextual interpretation is not one of them (yet).

There are a few reasons why computers cannot decode the real physical world with the same reliability as humans. Our world is not only changing incredibly fast, but human perception is also highly localized based on the varying geography of different places. Weather is another great example of dynamic conditions that require perception-based judgment.

There’s no way for a computer to anticipate and plan for every possible real-world scenario, so precise location alone cannot provide all of the answers. That being said, signal inaccuracies and other sources of error are inherent to GNSS relative navigation and must be addressed via advanced correction methodologies. Moreover, relative navigation has significant limitations in situations that offer no known reference points for context.

Let’s imagine the AV again. The vehicle’s relative navigation system uses the double yellow line to determine how to stay in its lane, but then an intense fog rolls in that completely obscures any road markings.

Without precise geographic coordinates representing the double yellow line, the vehicle has no way to determine its position on the road or stay in the appropriate lane.

The reality: we need both absolute & relative navigation for autonomous positioning solutions

All of this means that in the great debate over relative vs absolute positioning solutions, no one is really 100% in the right – or the wrong. There are definite benefits and limitations to each method, and one alone may be sufficient depending on your use case.

However, the high-stakes nature of autonomous navigation means we cannot simply accept the drawbacks of one solution without trying to mitigate them with the other. That’s why we argue that the future of autonomous navigation lies in drawing upon both GNSS absolute and relative positioning techniques.

The reality is that autonomy is not binary, and safety is a gradient. Everything in autonomous tech development should be thought about in terms of allowable risk.

Redundant navigation systems allow us to reduce risk in scenarios where it would be too dangerous to proceed with just one system. The trick is optimizing both absolute and relative positioning to produce the safest, most autonomous system possible.

We need to introduce human- or computer-based countermeasures for situations when one navigation system fails. The probability estimates from GNSS absolute and relative navigation each help the system make critical decisions, and the probability that at least one of them delivers a reliable solution is always at least as high as that of either method on its own.
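A worked example shows why redundancy helps. Suppose, as a simplifying assumption, that the absolute and relative systems fail independently, and that in some scenario the absolute system delivers a trustworthy solution with probability $p_a$ and the relative system with probability $p_r$. The values below are hypothetical, chosen only for illustration.

$$
P(\text{at least one trustworthy}) = 1 - (1 - p_a)(1 - p_r)
$$

$$
p_a = 0.99,\quad p_r = 0.95 \;\Rightarrow\; 1 - (0.01)(0.05) = 1 - 0.0005 = 0.9995
$$

Without the independence assumption the product formula no longer applies, but $P(A \cup B) \ge \max\big(P(A), P(B)\big)$ still holds, so adding a second system never lowers the chance that at least one is usable. Correlated failures, where a single event degrades both systems at once, shrink the benefit.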

In other words, AVs, robots, drones, and other tech leveraging autonomous navigation should utilize the best of each method, optimizing for safety, accuracy, and the highest degree of autonomy possible.

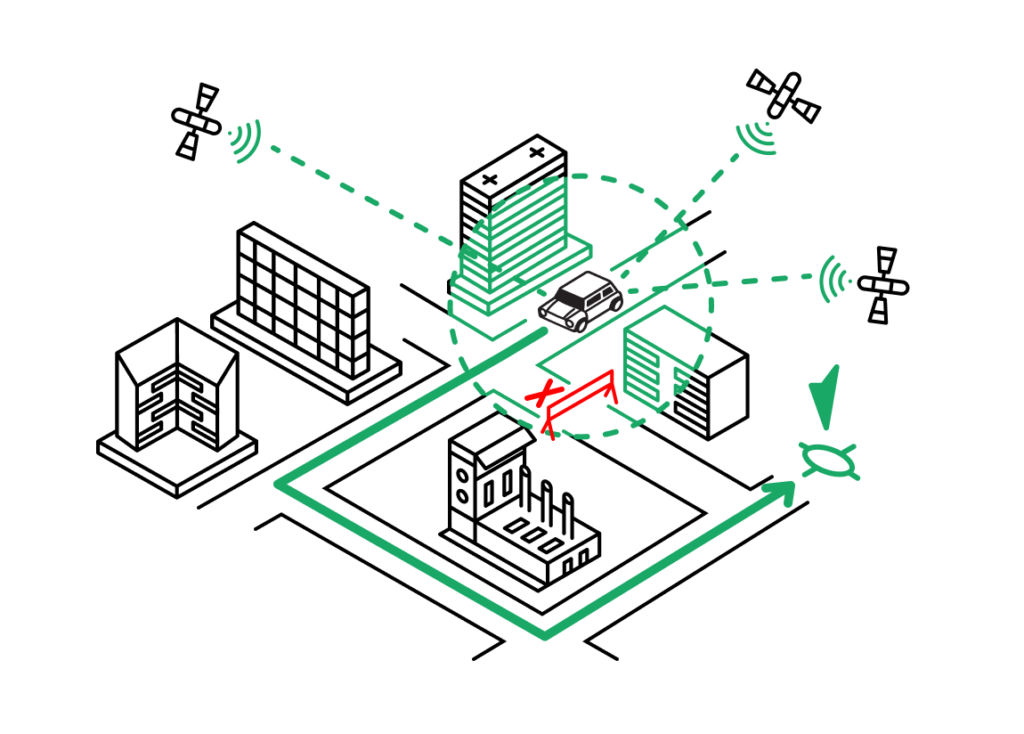

The safest and most accurate way to navigate around the Earth is to combine relative and absolute positioning.

Combining absolute & relative navigation in GNSS technology

At this point, you’re probably thinking that this all sounds great in theory, but is next to impossible in practice. Configuring and maintaining just one navigation system is resource-intensive, let alone two.

However, new innovations in GNSS technology are changing the game for autonomous navigation developers, making it not only possible to leverage the strengths of both absolute and relative navigation – but also preferable.

AVs and other autonomous tech are almost always already equipped with the basic hardware needed to enable redundant navigation systems.

GNSS sensors are ideal for powering absolute navigation, calculating precise positions within an existing reference frame of location coordinates. Cameras serve as relative navigation sensors, using a visual odometry pipeline to perceive the vehicle’s surroundings and track its movement.
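A visual odometry pipeline estimates how the camera moved between consecutive frames; turning those increments into a trajectory means chaining them together. The sketch below covers only that chaining step, in 2D, assuming the per-frame motion (forward distance, sideways distance, heading change) has already been estimated; feature tracking and motion estimation from images are not shown, and `Pose2D`, `compose`, and `integrate` are hypothetical names. It also shows why relative navigation drifts: any small error in one increment carries into every later pose.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float      # meters, in the frame of the starting pose
    y: float      # meters
    theta: float  # heading in radians, wrapped to [-pi, pi)


def compose(pose, dx, dy, dtheta):
    """Apply a motion increment (dx forward, dy left, dtheta turn) given in the body frame of `pose`."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    heading = (pose.theta + dtheta + math.pi) % (2 * math.pi) - math.pi
    return Pose2D(pose.x + c * dx - s * dy, pose.y + s * dx + c * dy, heading)


def integrate(increments, start=Pose2D(0.0, 0.0, 0.0)):
    """Chain frame-to-frame increments into a trajectory relative to `start`."""
    poses = [start]
    for dx, dy, dtheta in increments:
        poses.append(compose(poses[-1], dx, dy, dtheta))
    return poses


# Drive a 10 m square: perfect increments close the loop; a 1-degree extra turn per corner does not.
square = [(10.0, 0.0, math.pi / 2)] * 4
biased = [(10.0, 0.0, math.pi / 2 + math.radians(1.0))] * 4
for name, incs in (("perfect", square), ("biased", biased)):
    end = integrate(incs)[-1]
    print(name, "loop-closure error (m):", round(math.hypot(end.x, end.y), 3))
```

The biased run ends roughly half a meter from where it started even though each individual increment was nearly right, which is the kind of drift an absolute reference can reset.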

When these systems run simultaneously, their outputs can be compared for discrepancies, and the autonomously navigating object can use logic to determine which to rely on in a particular scenario. The data collected through relative positioning can then even be used to inform the absolute reference frame, continuously improving the overall navigation system and keeping it up-to-date with real-world change.
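The discrepancy check can be sketched as a consistency gate followed by a weighted average. This minimal illustration assumes both systems report a 2D position in a shared local frame with a single 1-sigma uncertainty each; the 3-sigma gate and the fallback rule (trust whichever source reports the smaller uncertainty) are hypothetical policy choices, not a description of any particular product. When the two agree, inverse-variance weighting combines them so the more certain source counts for more.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    x: float      # meters, shared local frame (e.g. east)
    y: float      # meters (e.g. north)
    sigma: float  # 1-sigma uncertainty per axis, meters


def check_and_fuse(absolute, relative, gate=3.0):
    """Return (estimate_to_use, consistent).

    Simple heuristic gate: the sources agree if their separation is within `gate`
    times the combined standard deviation of two independent estimates.
    """
    separation = math.hypot(absolute.x - relative.x, absolute.y - relative.y)
    combined_sigma = math.sqrt(absolute.sigma ** 2 + relative.sigma ** 2)
    if separation > gate * combined_sigma:
        # Discrepancy: hypothetical fallback to whichever source claims to be more certain.
        return (absolute if absolute.sigma <= relative.sigma else relative), False
    w_abs, w_rel = 1.0 / absolute.sigma ** 2, 1.0 / relative.sigma ** 2
    total = w_abs + w_rel
    fused = Estimate(
        (w_abs * absolute.x + w_rel * relative.x) / total,
        (w_abs * absolute.y + w_rel * relative.y) / total,
        math.sqrt(1.0 / total),  # fused uncertainty is smaller than either input
    )
    return fused, True


gnss = Estimate(0.00, 0.00, 0.02)      # e.g. a centimeter-level GNSS fix
odometry = Estimate(0.01, 0.00, 0.02)  # e.g. visual odometry propagated from the last fix
print(check_and_fuse(gnss, odometry))                 # agree: averaged
print(check_and_fuse(gnss, Estimate(5.0, 0.0, 0.5)))  # disagree: flagged
```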

Using our previous example of an AV encountering an abruptly stopping car and foggy conditions, let’s see how redundant absolute and relative systems can work together for optimal autonomous navigation. When the vehicle five yards ahead stops unexpectedly, the relative sensors can override the absolute navigation system and tell the AV to stop as well.

When the AV encounters a foggy area that obstructs the relative navigation system, the reference frame of the absolute system can take over to ensure it stays within the known lanes.
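These two scenarios amount to an arbitration policy. The sketch below encodes them as simple prioritized rules, assuming hypothetical inputs: whether perception sees an obstacle, a perception confidence score between 0 and 1, and the reported uncertainty of the absolute (GNSS) position. The thresholds, the `arbitrate` function, and the action names are illustrative; when neither source can be trusted, the sketch falls back to slowing to a safe stop as one example of a countermeasure.

```python
from enum import Enum


class Action(Enum):
    STOP = "stop for obstacle"
    FOLLOW_PERCEIVED_LANE = "follow lane markings seen by cameras"
    FOLLOW_MAPPED_LANE = "follow lane geometry from the absolute map"
    SLOW_TO_SAFE_STOP = "slow down and stop safely"


def arbitrate(obstacle_ahead, perception_confidence, gnss_sigma_m,
              min_confidence=0.7, max_gnss_sigma_m=0.1):
    """Pick an action from relative (perception) and absolute (GNSS + map) inputs.

    Rule order encodes priority: a perceived obstacle always wins, then confident
    perception, then a precise absolute fix; otherwise no source is trusted.
    """
    if obstacle_ahead:
        return Action.STOP  # relative sensing overrides the map
    if perception_confidence >= min_confidence:
        return Action.FOLLOW_PERCEIVED_LANE
    if gnss_sigma_m <= max_gnss_sigma_m:
        return Action.FOLLOW_MAPPED_LANE  # e.g. fog hides markings; absolute frame takes over
    return Action.SLOW_TO_SAFE_STOP


print(arbitrate(obstacle_ahead=True, perception_confidence=0.9, gnss_sigma_m=0.02))   # car stops ahead
print(arbitrate(obstacle_ahead=False, perception_confidence=0.2, gnss_sigma_m=0.02))  # fog
```

A rule set like this cannot catch an obstacle that perception misses entirely: if fog hides a stopped car, `obstacle_ahead` is false and the map-following rule still fires.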

You may be thinking of all the ways these systems can contradict each other. What if the car abruptly stops in the fog, obstructing the relative navigation sensors, but is still not represented in the absolute reference frame?

That’s a valid point – like we said earlier, nothing is perfect. But a system that combines absolute and relative navigation is always going to be more resilient than either system working on its own.

So why isn’t this the status quo in autonomous navigation? Well, it should be, but many developers struggle to source the right tech to power redundant navigation systems.

While there’s a wide variety of reliable, open-source relative navigation solutions available today, building a universal reference frame for absolute positioning remains a challenge.

The Most Accurate GNSS Positioning with Absolute & Relative Navigation

At Point One, we’re creating the most precise and accessible RTK network to power GNSS positioning around the globe. Our Atlas INS leverages this best-in-class corrections service along with tightly coupled sensor fusion to achieve centimeter-level positioning accuracy in an absolute frame, bringing us ever closer to the elusive universal reference required for true absolute navigation.

We recently combined this tech with open-source relative navigation solutions to show how easy it is to globally and locally reference LiDAR data (spoiler – it only took an afternoon of work).

We hope we’ve convinced you to abandon your previously held opinions on absolute and relative navigation. To get started optimizing your own autonomous product’s navigation system, check out our dev kits.

More About GNSS Absolute & Relative Positioning

Let’s answer a few top questions about absolute positioning and relative positioning.

What is the GNSS positioning concept?

Global Navigation Satellite Systems determine the precise geographic location of a receiver on or near the Earth’s surface using signals transmitted from satellites in space. This technology enables a wide range of applications, including navigation, mapping, surveying, and timing synchronization, by providing accurate positioning information to users worldwide.

Can GPS measure absolute position?

Yes, GPS measures absolute position by receiving signals from multiple satellites in space and using trilateration techniques to calculate the receiver’s position based on the time it takes for signals to travel from the satellites to the receiver.

What is the difference between GPS and GNSS positioning?

GPS is a specific system within the larger umbrella of GNSS, which encompasses multiple satellite navigation systems operated by different countries and organizations. GNSS positioning utilizes signals from multiple systems for determining precise location coordinates, while GPS specifically refers to the system developed and operated by the United States.

What are absolute and differential positioning in GPS?

Absolute positioning in GPS determines the precise location of a receiver independently – that is, without reference to another point or baseline – while differential positioning compares measurements between a rover and a known base station. Differential positioning can significantly enhance the accuracy of GPS positioning.

What is the difference between relative positioning and differential GPS?

Relative positioning and differential GPS (DGPS) are both methods used to improve the accuracy of GPS positioning. Relative positioning compares the measurements of two or more GPS receivers to compute relative distances or positions, while DGPS corrects GPS measurements using data from a known reference station.

What are the positioning modes of GNSS?

The positioning modes of GNSS (Global Navigation Satellite Systems) refer to different methods used to determine the position of a receiver on or near the Earth’s surface. The four primary positioning modes are Autonomous, Differential, Real-Time Kinematic, and Precise Point Positioning.

What is the difference between relative and absolute position?

Relative position is measured in relation to another object or point, while absolute position is measured according to a coordinate system, providing a unique location independent of other objects.