In just one afternoon, the Point One team built a scalable technical demonstration for globally georeferencing LiDAR scans without stitching, showing how high-precision GNSS can provide a universal reference frame for mapping at global scale. So why are developers still doing things the hard way, stitching data together without GNSS?

Challenges in LiDAR Data Interpretation & Global Referencing

Despite advancements in geospatial technology, translating local positioning information into globally georeferenced features remains a challenge for developers. Stitching disparate LiDAR scans into a cohesive map still often means building a dedicated tech stack for accurate data interpretation and referencing. That is a time-consuming detour for developers who want to build quickly and focus on their main objectives. At the same time, developers need to integrate HD maps to stay competitive and to create innovative solutions to some of the world’s most complex problems. This dynamic forces many developers to choose between quality and efficiency when building products and applications with LiDAR data.

Local referencing of LiDAR data is sufficient for mapping tools where ‘close enough’ is good enough, such as consumer navigation applications that help individuals find directions to a storefront. But this approximation is inadequate for producing HD maps that can power exciting new developments in autonomous vehicles, robotics, and other solutions requiring high precision. For AVs and similar devices to navigate safely and accurately in the real world, they need to be built with seamless, globally referenced data.

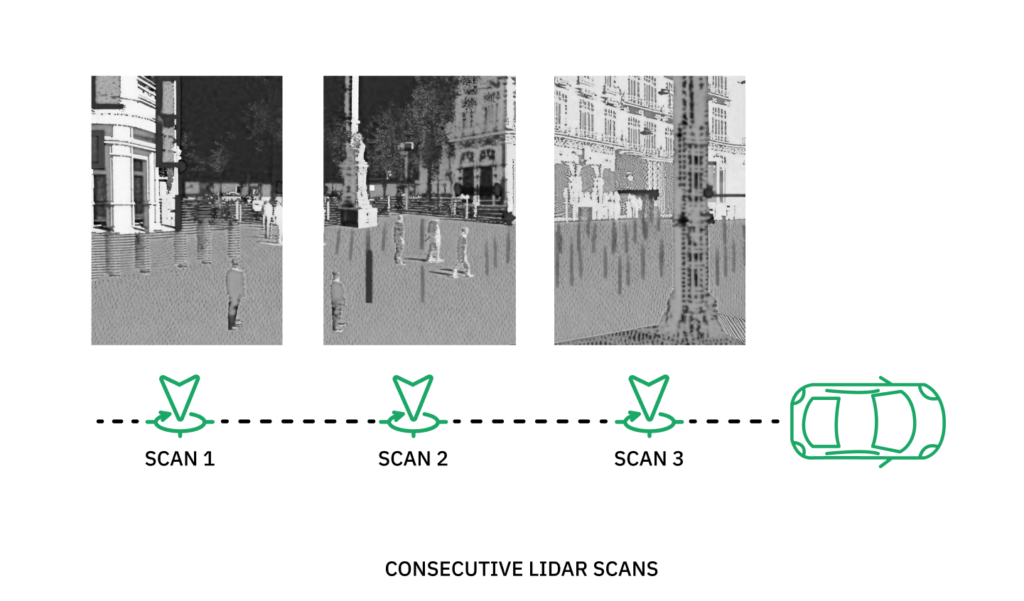

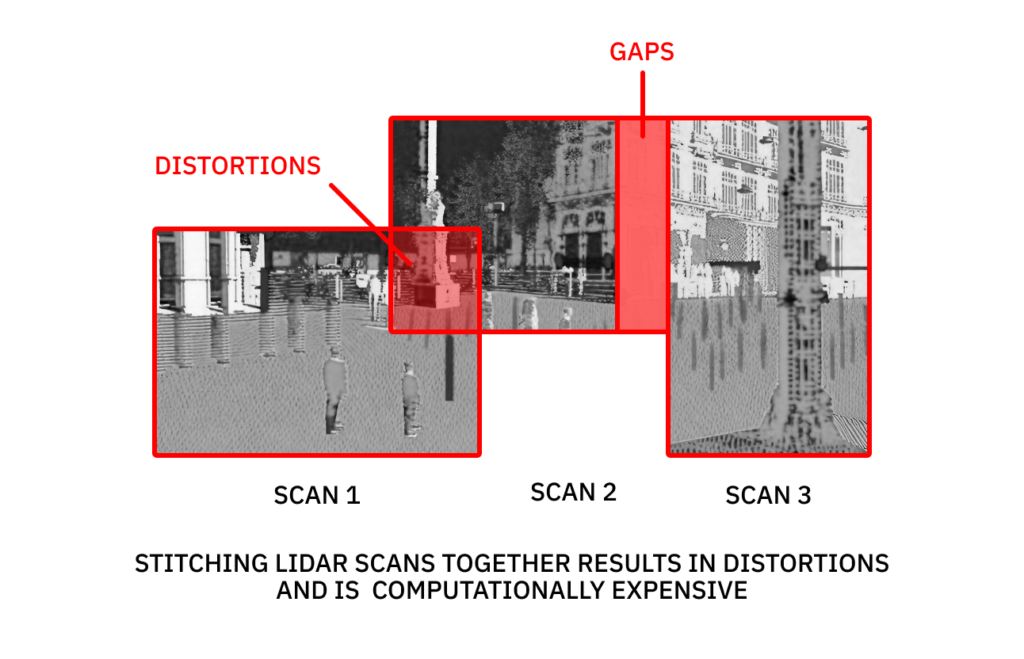

Any developer working on these types of projects knows that LiDAR point clouds of the same area captured by different sensors produce disjointed data, which becomes usable only after the scans are stitched together. That means building a complex suite of hardware, software, and algorithms that align the data points to each other (local referencing) and to the rest of the Earth (global referencing). The result is a unified reference dataset that precisely represents the physical world.
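Global referencing ultimately means expressing every point in a frame tied to the Earth. The helpers below are a minimal sketch built on standard WGS-84 geometry, not Point One’s code. They convert a GNSS latitude/longitude/ellipsoidal height into Earth-centered, Earth-fixed (ECEF) coordinates, and then into East-North-Up (ENU) offsets around a chosen map origin. The function names and the use of a local ENU map frame are our own assumptions for illustration.

```python
import math

# WGS-84 ellipsoid constants
WGS84_A = 6378137.0                   # semi-major axis [m]
WGS84_F = 1.0 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h_m):
    """Convert WGS-84 latitude/longitude [deg] and ellipsoidal height [m] to ECEF (x, y, z) [m]."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    sin_lat, cos_lat = math.sin(lat), math.cos(lat)
    # Prime-vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)
    x = (n + h_m) * cos_lat * math.cos(lon)
    y = (n + h_m) * cos_lat * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + h_m) * sin_lat
    return x, y, z


def ecef_to_enu(xyz, origin_lat_deg, origin_lon_deg, origin_h_m):
    """Express an ECEF point as East/North/Up offsets [m] from a geodetic origin."""
    ox, oy, oz = geodetic_to_ecef(origin_lat_deg, origin_lon_deg, origin_h_m)
    dx, dy, dz = xyz[0] - ox, xyz[1] - oy, xyz[2] - oz
    lat = math.radians(origin_lat_deg)
    lon = math.radians(origin_lon_deg)
    sin_lat, cos_lat = math.sin(lat), math.cos(lat)
    sin_lon, cos_lon = math.sin(lon), math.cos(lon)
    # Rotate the ECEF offset into the local tangent plane at the origin
    east = -sin_lon * dx + cos_lon * dy
    north = -sin_lat * cos_lon * dx - sin_lat * sin_lon * dy + cos_lat * dz
    up = cos_lat * cos_lon * dx + cos_lat * sin_lon * dy + sin_lat * dz
    return east, north, up


def geodetic_to_enu(lat_deg, lon_deg, h_m, origin):
    """Geodetic point -> ENU offsets relative to origin = (lat_deg, lon_deg, h_m)."""
    return ecef_to_enu(geodetic_to_ecef(lat_deg, lon_deg, h_m), *origin)
```

For example, a point 0.00001° north of an origin at latitude 37.7749° comes out about 1.11 m north, with essentially zero east and up offsets. A local ENU frame keeps coordinates small and metric, which suits point clouds. For maps spanning large regions, storing ECEF or a projected coordinate system is a common alternative.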

Traditionally, developers use Simultaneous Localization and Mapping (SLAM), which estimates sensor poses while reconstructing the environment the sensor traverses. Stitching together just two separately captured LiDAR point clouds with traditional methods is resource-intensive, yet that is nowhere near the effort required to produce HD maps at the scale global solutions demand. If stitching only two scans takes a developer a few seconds, stitching together a map of an entire city to safely and accurately power AVs could take years. As high-precision maps and LiDAR data increasingly fuel complex solutions at global scale, developers need a tech stack that lets them build with absolute, globally referenced accuracy quickly and efficiently.
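Stitching is hard to scale partly because SLAM driven purely by scan matching estimates each pose relative to the previous one, so small registration errors compound. The toy model below illustrates this effect and is not a SLAM implementation. It assumes a vehicle driving in a straight line and a constant heading bias of 0.01° per scan-to-scan match, which is an arbitrary, hypothetical figure. It then measures how far the chained estimate ends up from the true end point.

```python
import math


def chain_relative_poses(num_scans, step_m, yaw_err_deg_per_step):
    """Dead-reckon a 2D trajectory by chaining scan-to-scan registrations.

    The true path is a straight line along +x with `step_m` between scans.
    Each registration is assumed to return the correct step length but a
    small, constant yaw bias that is never corrected, because nothing ties
    the chain to an absolute reference. Returns the estimated (x, y) of the
    last scan.
    """
    x = y = heading = 0.0
    yaw_err = math.radians(yaw_err_deg_per_step)
    for _ in range(num_scans):
        heading += yaw_err               # orientation error accumulates...
        x += step_m * math.cos(heading)  # ...and leaks into position
        y += step_m * math.sin(heading)
    return x, y


def drift_after(num_scans, step_m=1.0, yaw_err_deg_per_step=0.01):
    """Distance [m] between the chained estimate and the true end point."""
    est_x, est_y = chain_relative_poses(num_scans, step_m, yaw_err_deg_per_step)
    true_x, true_y = num_scans * step_m, 0.0
    return math.hypot(est_x - true_x, est_y - true_y)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(f"{n:>5} scans -> drift {drift_after(n):8.3f} m")
```

With this bias, drift grows from about a centimeter after 10 scans, to under a meter after 100, to more than 80 m after 1,000. A pose referenced to an absolute frame has an error bounded by the positioning accuracy, regardless of how many scans came before it.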

Changing the Way We Georeference Objects Using LiDAR

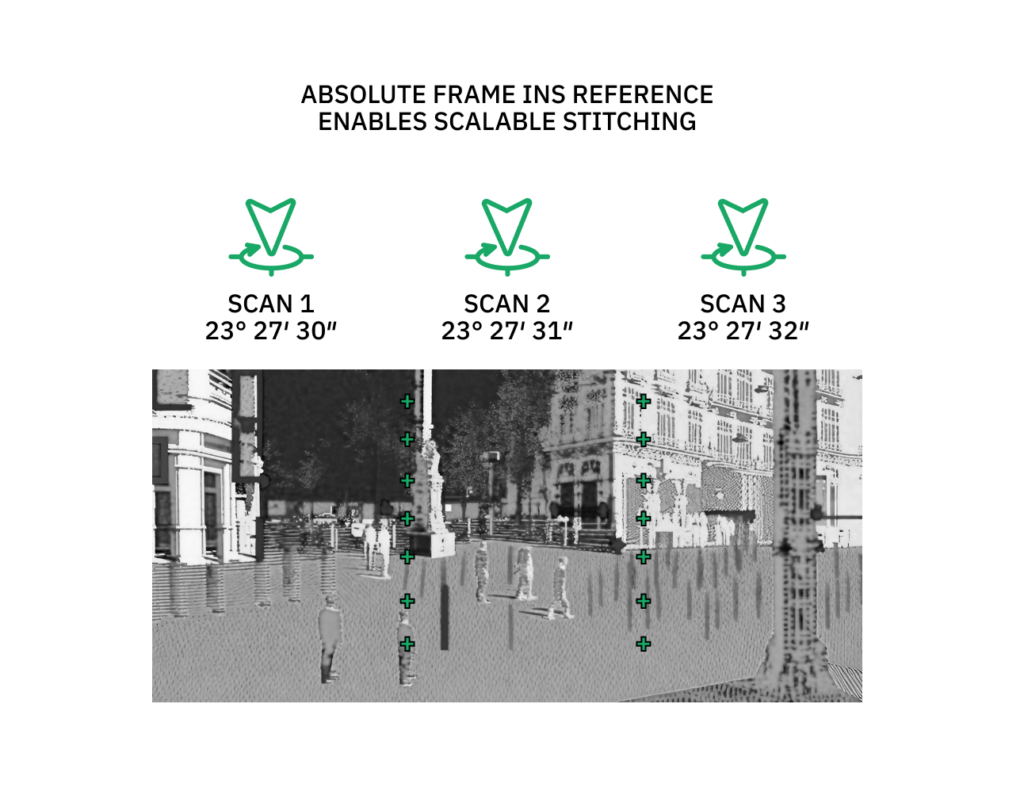

Recent advancements in GNSS are delivering the high-precision global referencing developers need, eliminating the need to stitch together disparate sensor data. Point One’s Atlas is an end-to-end inertial navigation system (INS) that uses high-precision sensor fusion libraries to deliver accuracy in the 1 cm to 10 cm range. It also integrates easily with ROS for streamlined application and product development. This leap in precision comes from Polaris, Point One’s RTK correction network, which models additional sources of GNSS error to provide map alignment 100x better than standard GNSS.

To demonstrate how developers can use this high-precision data to globally georeference LiDAR point clouds and produce a seamless reference frame, the computer vision team at Point One dedicated an afternoon to a proof of concept. First, the team collected data from a Point One Atlas INS. Atlas uses Point One’s Polaris RTK and FusionEngine libraries to capture positioning data with centimeter-level accuracy, derived from the industry’s most precise GNSS cloud correction service. The team then integrated Point One’s FusionEngine API with ROS so that the sensor data could be referenced both locally and globally. The result was a unified, globally referenced 3D map covering an area the size of a city block, produced without SLAM. As the video above shows, the output is a clean map with minimal fuzziness or overlap.
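The core of this kind of demonstration is direct georeferencing. Each LiDAR point is first moved from the sensor frame into the vehicle body frame using a fixed mounting calibration. It is then moved into the global map frame using the INS pose at that time step. The NumPy sketch below shows that chain. It assumes the INS pose has already been expressed as an ENU position plus roll/pitch/yaw, and that a single pose applies to the whole scan. The function names, the Z-Y-X rotation convention, and the extrinsic parameters are illustrative assumptions, not the FusionEngine or ROS API.

```python
import numpy as np


def rotation_from_rpy(roll, pitch, yaw):
    """Body-to-map rotation from roll/pitch/yaw [rad], composed as Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    return rz @ ry @ rx


def georeference_scan(points_lidar, ins_position_enu, ins_rpy,
                      lidar_to_body_rot, lidar_to_body_trans):
    """Map an (N, 3) LiDAR scan into the ENU map frame.

    points_lidar        : (N, 3) points in the LiDAR sensor frame [m]
    ins_position_enu    : (3,) INS body position in the ENU map frame [m]
    ins_rpy             : (roll, pitch, yaw) of the body in the map frame [rad]
    lidar_to_body_rot   : (3, 3) extrinsic rotation, LiDAR -> body (hypothetical calibration)
    lidar_to_body_trans : (3,) lever arm of the LiDAR in the body frame [m]
    """
    pts = np.asarray(points_lidar, dtype=float)
    # 1) Sensor frame -> vehicle body frame (fixed mounting calibration)
    pts_body = pts @ lidar_to_body_rot.T + lidar_to_body_trans
    # 2) Body frame -> global map frame, using the INS pose at scan time
    r_map_body = rotation_from_rpy(*ins_rpy)
    return pts_body @ r_map_body.T + np.asarray(ins_position_enu, dtype=float)
```

Because each scan is projected with its own pose, two scans of the same landmark taken from different positions land on the same map coordinates without being registered to each other. A spinning LiDAR needs a noticeable fraction of a second to complete a sweep (about 100 ms at a 10 Hz spin rate). For that reason, a production pipeline would typically interpolate the pose for each point rather than use one pose per scan.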

Because this demonstration registers data to an absolute frame at every time step, it avoids the drift that typically accumulates in SLAM based on scan matching alone. Providing the globally referenced data as an “initial guess” to SLAM algorithms will dramatically cut the compute required for place recognition, loop closure, and overall optimization. We expect this approach to substantially reduce the operational costs associated with creating and updating maps.
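To make the “initial guess” idea concrete, here is a textbook point-to-point ICP that refines a scan-to-map pose starting from a prior. It uses nearest-neighbor matching plus an SVD-based Kabsch fit. In the scenario described above, the prior `r_init`, `t_init` would come from the georeferenced INS solution. Everything here, including the function names and the brute-force neighbor search, is a simplified sketch. It is not Point One’s pipeline or any particular SLAM library.

```python
import numpy as np


def best_fit_transform(src, dst):
    """Least-squares rigid transform (Kabsch/SVD) mapping src -> dst, both (N, 3)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    h = (src - src_c).T @ (dst - dst_c)
    u, _, vt = np.linalg.svd(h)
    r = vt.T @ u.T
    if np.linalg.det(r) < 0:  # guard against a reflection
        vt[-1, :] *= -1
        r = vt.T @ u.T
    t = dst_c - r @ src_c
    return r, t


def icp(scan, map_points, r_init, t_init, max_iters=30, tol=1e-9):
    """Point-to-point ICP refining a scan-to-map pose from an initial guess.

    r_init, t_init: prior pose (e.g. from a georeferenced INS solution).
    Returns the refined rotation, translation, and iterations used.
    """
    r, t = r_init.copy(), t_init.copy()
    prev_err = np.inf
    for i in range(1, max_iters + 1):
        moved = scan @ r.T + t
        # Brute-force nearest neighbours; a k-d tree would be used at scale
        d2 = ((moved[:, None, :] - map_points[None, :, :]) ** 2).sum(axis=2)
        nn = map_points[d2.argmin(axis=1)]
        err = d2.min(axis=1).mean()  # mean squared residual
        if prev_err - err < tol:
            break
        prev_err = err
        # Solve for an incremental correction and compose it onto the pose
        dr, dt = best_fit_transform(moved, nn)
        r, t = dr @ r, dr @ t + dt
    return r, t, i
```

Starting close to the true pose keeps most nearest-neighbor matches correct from the first iteration. As a result, the solver converges in a few iterations instead of searching for a global alignment. At real map sizes, the O(N·M) distance matrix would be replaced by a spatial index such as a k-d tree.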

Unlocking Opportunities to Rapidly Scale Global Georeferencing

In just one afternoon, Point One’s engineers were able to develop a scalable tech stack for globally georeferencing point clouds without data stitching. While this was an internal research and development project, the results show how accessible high-precision GNSS solutions, easily integrated with commonly used frameworks like ROS, can dramatically reduce the time and effort needed to develop a universal reference frame for global mapping. This enables developers worldwide to augment traditional data stitching methods, unlocking exciting opportunities for innovation at scale.

The following are just a few examples of solutions developers can build leveraging Point One’s Polaris, Atlas, & ROS tech stack:

- Path planning: Map data with centimeter-level accuracy is essential for powering navigation systems in AVs, drones, and robotics products, and it also provides deeper insights for logistics and delivery fleets, precision agriculture, and transportation networks

- World mapping & measurement: Globally georeferencing LiDAR data at scale eliminates the need to survey for position, replacing traditional mapping methods that require ground reference points or interpolation

- Progress monitoring: Physical details captured by LiDAR and rendered digitally without losing accuracy can be used to monitor construction sites, land use, and any other area stakeholders need to observe regularly

- Mining operations: Data captured by sensors in open-pit mines can be easily georeferenced, letting engineers take volumetric measurements against reference points in real time

And of course, the simplicity and accessibility of this tech stack mean LiDAR hobbyists can easily create living models of whatever they scan, whether their own neighborhood or a race track.
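The progress-monitoring and mining examples both come down to comparing georeferenced surveys of the same area. Because the surveys already share a global frame, one simple approach is to bin each point cloud into a height grid. You then sum the per-cell height differences multiplied by the cell area. This approach is our assumption, since the original does not prescribe a method; it is sketched below in plain Python. Keeping only the highest point per cell is a deliberate simplification, and real workflows often filter out vegetation and equipment first.

```python
import math


def rasterize_max_height(points_enu, cell_m):
    """Bin georeferenced (east, north, up) points into a grid, keeping the highest 'up' per cell."""
    grid = {}
    for e, n, u in points_enu:
        key = (math.floor(e / cell_m), math.floor(n / cell_m))
        if key not in grid or u > grid[key]:
            grid[key] = u
    return grid


def volume_change(before_pts, after_pts, cell_m=0.5):
    """Signed volume change [m^3] between two surveys, over cells observed in both.

    Positive values mean material was added (fill); negative values mean it
    was removed (cut). Cells seen in only one survey are ignored.
    """
    before = rasterize_max_height(before_pts, cell_m)
    after = rasterize_max_height(after_pts, cell_m)
    common = before.keys() & after.keys()
    return sum((after[k] - before[k]) * cell_m * cell_m for k in common)
```

For instance, raising a 2 m × 2 m patch of otherwise flat ground by 1 m yields a change of +4 m³ when using 0.5 m cells.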

Getting Started With Point One’s High-Precision GNSS Solutions

Thanks to the latest in GNSS technology, it only took the Point One team an afternoon to build this flexible tech stack that eliminates the need for developers to manually stitch together disparate LiDAR datasets. The best part? This workflow is easily repeatable by developers working on similar projects. Instead of devoting hours to an extremely tedious process, developers can now focus on building innovative solutions with confidence in the precision of the data they are using.

Making the latest technology accessible to developers through easy integrations is critical, particularly in the geospatial industry, which holds so much potential to help solve real-world problems. Point One helps developers build their own tech stack by offering Polaris RTK and FusionEngine APIs that integrate easily with common systems like ROS. It also offers easy-to-use hardware like the Atlas INS, which captures data points with precise timing and location.